
Bad definitions break AI chatbots

You've invested months building your AI chatbot. Your team has collected thousands of customer conversations, labeled intents, trained models, and fine-tuned parameters. The demo looks perfect. Leadership is excited.

Then you launch.

Within days, customers are complaining. The bot misunderstands simple requests. It confidently provides wrong answers. Support tickets are piling up faster than before the bot existed. Your team is scrambling to figure out what went wrong.

The problem isn't your model architecture. It's not your deployment pipeline. It's something far more fundamental that most teams overlook entirely: your training data was built on a foundation of ambiguous definitions.

Here's a scenario that plays out in companies every single day:

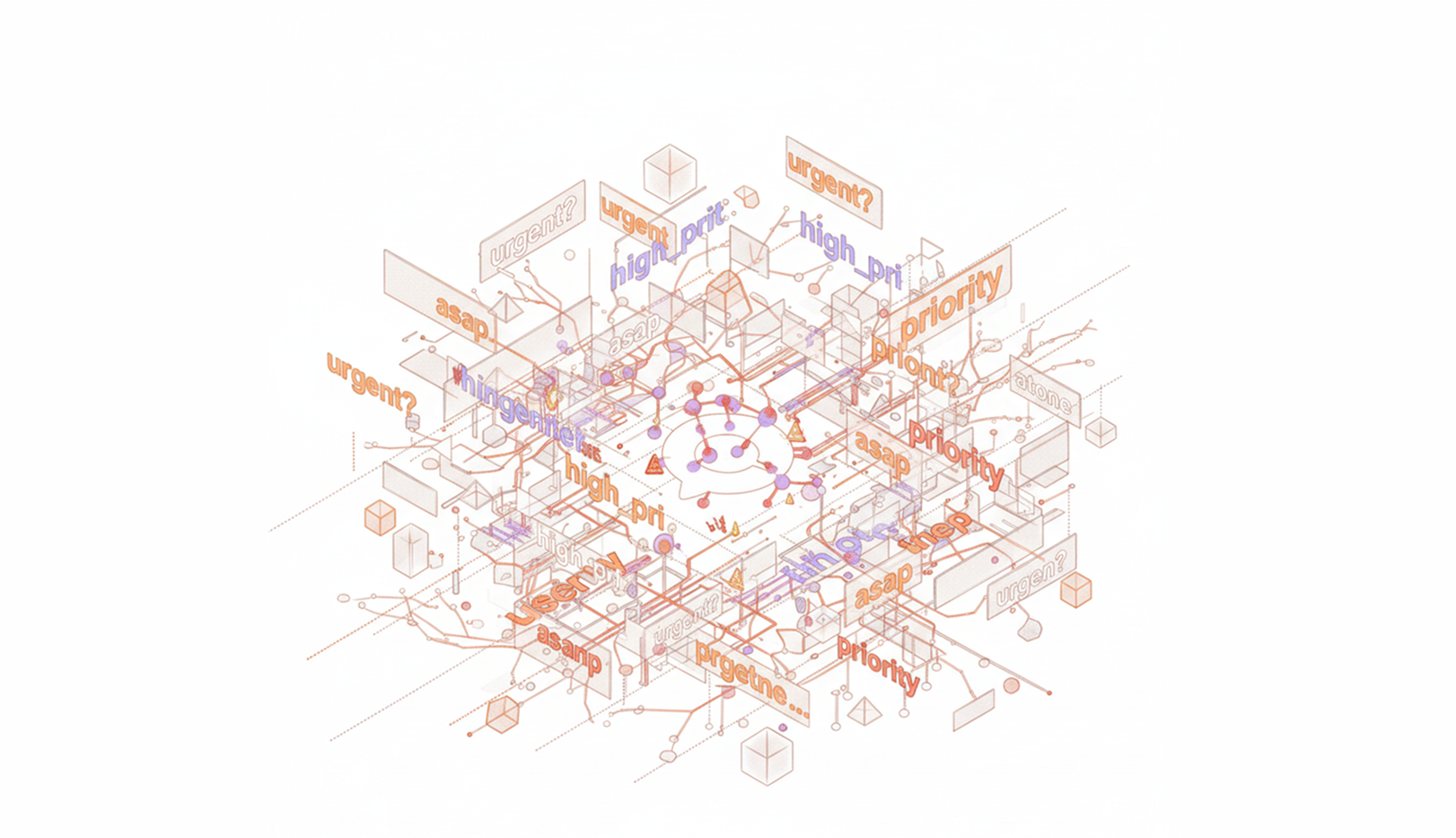

Your team is labeling customer service conversations to train a chatbot. One labeler sees "I need this ASAP" and tags it as "urgent_request." Another labeler sees "I need this by end of week" and also tags it "urgent_request." A third sees "This is time-sensitive" and creates a new tag: "high_priority."

Three different interpretations. Three different labels. One massive problem.

When your model trains on this inconsistent data, it learns contradictory patterns. The result? A chatbot that can't reliably distinguish between truly urgent issues and routine requests. Customer frustration skyrockets. Your support team loses trust in the AI. The project that was supposed to reduce costs is now consuming more resources than ever.

This isn't a hypothetical. A 2024 study found that 67% of enterprise AI failures can be traced back to data quality issues, with inconsistent labeling being the leading culprit.
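The naming half of that labeling scenario can be caught mechanically. Below is a minimal sketch, assuming labels arrive as (text, label) pairs. The alias map is hypothetical, and so is the decision to fold `high_priority` into `urgent_request`. Only a written definition can settle whether "by end of week" belongs in that label at all.

```python
from collections import Counter

# Hypothetical alias map: every label string annotators may use points to one
# canonical label. Folding "high_priority" into "urgent_request" is an assumed
# team decision, made here only for illustration.
CANONICAL = {
    "urgent_request": "urgent_request",
    "high_priority": "urgent_request",
}

def canonicalize(label: str) -> str:
    """Map a raw label to its canonical form; reject labels nobody has defined."""
    if label not in CANONICAL:
        raise ValueError(f"label {label!r} has no definition; define it before training")
    return CANONICAL[label]

raw = [
    ("I need this ASAP", "urgent_request"),
    ("I need this by end of week", "urgent_request"),
    ("This is time-sensitive", "high_priority"),
]

before = Counter(label for _, label in raw)
after = Counter(canonicalize(label) for _, label in raw)
print(before)  # two distinct labels
print(after)   # one canonical label
```

Rejecting unknown labels at training time means a third labeler's newly invented tag raises an error. Someone then has to resolve it by writing a definition, instead of it silently becoming a new class.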

Let's put real numbers to this problem.

Imagine you're building a chatbot for an e-commerce company. You need to train it to understand product categories. Your team starts labeling:

Without clear definitions:

You end up with four different labels for the same product category. Your model now needs 4x more training examples to learn what should have been one consistent pattern. That's 4x the labeling cost, 4x the training time, and a model that's still less accurate than it should be.
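Spelling variants of the same category can also be flagged automatically before they reach training. Here is a rough sketch using Python's standard `difflib`. The category names are hypothetical, and the 0.8 similarity threshold is an arbitrary assumption you would tune. The function only suggests candidates; a person still decides whether two labels really mean the same thing.

```python
import difflib
import itertools
import re

def normalize(label: str) -> str:
    """Lowercase and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", label.lower())

def near_duplicates(labels: list[str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return label pairs whose normalized forms are identical or very similar."""
    pairs = []
    for a, b in itertools.combinations(sorted(set(labels)), 2):
        ratio = difflib.SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return pairs

# Hypothetical labels: four spellings of one category, plus an unrelated one.
labels = ["Home & Kitchen", "home_kitchen", "HomeKitchen", "home-and-kitchen", "Electronics"]
for a, b, score in near_duplicates(labels):
    print(f"{a!r} ~ {b!r} (similarity {score})")
```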

The cascade effect:

Total first-year cost of undefined terms: $245,000+

And that's just the direct costs. You're not accounting for:

One culprit is undefined terms: words everyone on your team uses, but nobody has actually defined.

Example: "Customer intent"

Ask five people on your AI team what "customer intent" means and you'll get five different answers:

Without a clear, documented definition, every downstream decision becomes inconsistent. Your annotators label differently. Your model learns differently. Your chatbot behaves unpredictably.

Your business changes. Your products evolve. Your customers' language shifts. But your definitions remain frozen in time.

Example: "Product return"

Version 1.0 (Launch): A customer ships an item back for a refund

Version 2.0 (6 months later): Company adds exchanges - now "returns" include both refunds AND exchanges, but the definition was never updated

Version 3.0 (1 year later): Company adds digital products that can't be "returned" physically - now there are refund requests without physical returns

Your chatbot was trained on Version 1.0 definitions but is now handling Version 3.0 reality. The model has no idea that "return" has evolved. Accuracy plummets. Nobody knows why.
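Writing each version of "product return" as an explicit rule makes the drift easy to see. In this sketch the `Request` fields are a hypothetical simplification. The v1.0 and v2.0 rules follow the version history above. A refund for a digital product matches neither rule, and that is exactly the case a v3.0 definition would have to settle.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    physical_item: bool   # hypothetical field: was a physical item shipped?
    wants_refund: bool
    wants_exchange: bool

def is_return_v1(r: Request) -> bool:
    """v1.0: a customer ships an item back for a refund."""
    return r.physical_item and r.wants_refund

def is_return_v2(r: Request) -> bool:
    """v2.0: refunds AND exchanges of physical items count as returns."""
    return r.physical_item and (r.wants_refund or r.wants_exchange)

requests = {
    "refund, shipped item": Request(physical_item=True, wants_refund=True, wants_exchange=False),
    "exchange, shipped item": Request(physical_item=True, wants_refund=False, wants_exchange=True),
    "refund, digital product": Request(physical_item=False, wants_refund=True, wants_exchange=False),
}

for name, req in requests.items():
    print(f"{name}: v1={is_return_v1(req)}, v2={is_return_v2(req)}")
```

Labels produced under v1.0 never mark an exchange as a return, so a model trained on them has no way to learn that case.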

Some terms inherently require human judgment, but without clear criteria, that judgment becomes arbitrary.

Example: "Angry customer"

Annotator A's interpretation: Uses profanity or all caps

Annotator B's interpretation: Expresses any dissatisfaction

Annotator C's interpretation: Explicitly threatens to cancel or leave negative review

Three annotators, three completely different thresholds for the same label. Your sentiment analysis model is now trained on fundamentally incompatible data. It will never be consistently accurate because the ground truth itself is inconsistent.
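Encoding the three interpretations as rules shows how far apart they are. This is a toy sketch: the keyword lists and messages are hypothetical, and real annotators apply judgment rather than keyword matching. Even so, the pairwise agreement makes the gap concrete.

```python
import itertools

# Hypothetical word lists, for illustration only.
PROFANITY = {"damn", "hell"}
DISSATISFACTION = ("disappointed", "frustrated", "unhappy", "annoyed", "terrible")
THREATS = ("cancel", "negative review", "bad review")

def annotator_a(msg: str) -> bool:
    """Angry = uses profanity or writes in all caps."""
    words = {w.strip(".,!?").lower() for w in msg.split()}
    return msg.isupper() or bool(words & PROFANITY)

def annotator_b(msg: str) -> bool:
    """Angry = expresses any dissatisfaction."""
    return any(term in msg.lower() for term in DISSATISFACTION)

def annotator_c(msg: str) -> bool:
    """Angry = explicitly threatens to cancel or leave a negative review."""
    return any(term in msg.lower() for term in THREATS)

messages = [
    "WHERE IS MY ORDER",
    "I'm a bit disappointed with the delivery time.",
    "I'm frustrated. Fix this or I will cancel my subscription.",
    "Thanks, that solved it!",
]

annotators = {"A": annotator_a, "B": annotator_b, "C": annotator_c}
labels = {name: [rule(m) for m in messages] for name, rule in annotators.items()}

# Pairwise percent agreement between annotators.
for x, y in itertools.combinations(labels, 2):
    agree = sum(p == q for p, q in zip(labels[x], labels[y])) / len(messages)
    print(f"{x} vs {y}: {agree:.0%} agreement")
```

On just four messages, pairwise agreement ranges from 25% to 75%.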

The rise of large language models hasn't solved this problem - it's actually made it worse in new ways.

Customer language evolves faster than ever. Your 2023 training data defined "AI assistant" one way. By 2024, customers are using completely different terminology. Your definitions haven't kept pace, so your model's performance quietly degrades month by month.
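Because this degradation is quiet, it helps to watch for it directly. One crude signal is the share of words in recent customer messages that never appeared in the training data. This is sketched here as an assumption, not an established method. A rising rate is a prompt to check whether your definitions still match how customers talk. The messages and the replacement terms ("copilot", "agent") are hypothetical.

```python
import re

def tokens(text: str) -> set[str]:
    """Naive lowercase word tokenizer."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def unseen_rate(training_texts: list[str], new_texts: list[str]) -> float:
    """Share of distinct words in new_texts that never appear in training_texts."""
    train_vocab = set().union(*(tokens(t) for t in training_texts))
    new_vocab = set().union(*(tokens(t) for t in new_texts))
    if not new_vocab:
        return 0.0
    return len(new_vocab - train_vocab) / len(new_vocab)

# Hypothetical messages: the newer batch uses different words for the same product.
training = ["my ai assistant will not answer", "reset my assistant"]
this_month = ["my copilot will not answer", "reset my agent"]
print(f"{unseen_rate(training, this_month):.0%} of this month's vocabulary is new")
```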

Most companies now run multiple AI models: one for intent classification, another for sentiment, another for entity extraction. Each model was trained by a different team, at a different time, using different definitions for overlapping concepts.

Result: Your chatbot's intent classifier says the customer wants "billing_information" but your entity extractor can't find the relevant billing details because it was trained with a different definition of what constitutes "billing information."
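One way to catch this is to fingerprint each definition and compare it with a single shared registry at deploy time. This assumes every model records the exact definition text it was trained against. The registry contents, model names, and definition wording below are hypothetical.

```python
import hashlib

def fingerprint(definition: str) -> str:
    """Short hash of a definition's text, ignoring case and extra whitespace."""
    normalized = " ".join(definition.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]

# Hypothetical shared registry: the single place each concept is defined.
REGISTRY = {
    "billing_information": "Invoices, charges, refunds, and payment methods the customer asks about",
}

def stale_concepts(trained_against: dict[str, str]) -> list[str]:
    """Concepts whose registry definition differs from the one a model was trained on."""
    return sorted(
        concept
        for concept, fp in trained_against.items()
        if concept not in REGISTRY or fingerprint(REGISTRY[concept]) != fp
    )

# Hypothetical: fingerprints each model saved at training time.
intent_classifier = {"billing_information": fingerprint(REGISTRY["billing_information"])}
entity_extractor = {"billing_information": fingerprint("Invoice numbers and amounts only")}

for name, fps in [("intent_classifier", intent_classifier), ("entity_extractor", entity_extractor)]:
    print(name, stale_concepts(fps) or "matches registry")
```

Used as a deployment gate, a non-empty result could block a release until the model is retrained against the current definition.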

Many teams think having human reviewers validate AI outputs solves the consistency problem. It doesn't - it just moves the inconsistency to a different stage. If your human reviewers don't share clear definitions, they'll override the AI's decisions arbitrarily, creating yet another layer of conflicting ground truth.
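Whether reviewers share a definition can be measured before their decisions are trusted as ground truth. A common statistic is Cohen's kappa, which scores agreement between two raters after subtracting the agreement expected by chance. The review decisions below are hypothetical. If you'd rather not hand-roll it, scikit-learn's `cohen_kappa_score` computes the same unweighted statistic.

```python
from collections import Counter

def cohens_kappa(rater1: list[str], rater2: list[str]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    c1, c2 = Counter(rater1), Counter(rater2)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n**2
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is undefined,
        # so treat it as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical: two reviewers decide whether to accept or override the same 10 AI labels.
reviewer_1 = ["accept", "accept", "override", "accept", "override",
              "accept", "accept", "override", "accept", "accept"]
reviewer_2 = ["accept", "override", "override", "accept", "accept",
              "accept", "override", "override", "accept", "accept"]

print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.35, despite 70% raw agreement
```

A low kappa doesn't tell you which reviewer is right. It suggests they aren't applying the same definition, so their overrides can't yet serve as ground truth.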

The fix isn't more data. It's not a better model architecture. It's not even more compute power.

The fix is treating definitions as a first-class engineering artifact.

Here's what that looks like in practice:

Before a single piece of training data gets annotated, create clear, documented definitions for every label in your taxonomy.

Poor definition:

Strong definition:
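One way to enforce "define before you label" is to store each definition as a structured record and block annotation until every label in the taxonomy is complete. The schema below is hypothetical, not a standard. Its fields are a meaning statement, inclusion and exclusion criteria, and examples and counterexamples. Only the `urgent_request` meaning comes from this article; its criteria and examples are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class LabelDefinition:
    name: str
    meaning: str                                          # one-sentence statement of the label
    include_if: list[str] = field(default_factory=list)   # criteria that make the label apply
    exclude_if: list[str] = field(default_factory=list)   # near-misses that must NOT get it
    examples: list[str] = field(default_factory=list)
    counterexamples: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """What is still missing before annotators should use this label."""
        missing = []
        if not self.meaning.strip():
            missing.append("no meaning statement")
        if not self.include_if:
            missing.append("no inclusion criteria")
        if not self.exclude_if:
            missing.append("no exclusion criteria")
        if not (self.examples and self.counterexamples):
            missing.append("needs examples and counterexamples")
        return missing

def not_ready(taxonomy: list[LabelDefinition]) -> dict[str, list[str]]:
    """Return {label: problems} for every label that is not fully defined yet."""
    return {d.name: p for d in taxonomy if (p := d.problems())}

# Criteria and examples below are hypothetical.
taxonomy = [
    LabelDefinition(
        "urgent_request",
        "Customer states need for immediate resolution",
        include_if=["asks for resolution the same day"],
        exclude_if=["deadline a week or more away"],
        examples=["I need this ASAP"],
        counterexamples=["I need this by end of week"],
    ),
    LabelDefinition("high_priority", ""),
]
print(not_ready(taxonomy))  # only high_priority is flagged
```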

Treat definitions like code. When business requirements change, create a new version. Track what changed and when.

Definition History:

urgent_request v1.0 (Jan 2024): Customer states need for immediate resolution

urgent_request v1.1 (Mar 2024): Added premium tier criteria

urgent_request v2.0 (Jun 2024): Separated time-based urgency from business-impact urgency

Now when model performance changes, you can trace it back to specific definition updates. You can even maintain separate models trained on different definition versions for A/B testing.
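That tracing works best if every labeled example is tagged with the definition version in effect when it was labeled. Here is a minimal sketch using the `urgent_request` history above. Several details are assumptions, not part of the history itself: the effective days, the annotation dates, and the rule that a major-version bump triggers relabeling.

```python
import bisect
from datetime import date

# urgent_request history from the text. Only months are given there, so each
# version is assumed (hypothetically) to take effect on the 1st of the month.
HISTORY = [
    (date(2024, 1, 1), "1.0", "Customer states need for immediate resolution"),
    (date(2024, 3, 1), "1.1", "Added premium tier criteria"),
    (date(2024, 6, 1), "2.0", "Separated time-based urgency from business-impact urgency"),
]
_STARTS = [start for start, _, _ in HISTORY]

def version_in_effect(on: date) -> str:
    """Return the urgent_request version that applied on a given date."""
    i = bisect.bisect_right(_STARTS, on) - 1
    if i < 0:
        raise ValueError(f"urgent_request was not yet defined on {on}")
    return HISTORY[i][1]

def needs_relabel(labeled_under: str, current: str) -> bool:
    """Assumed policy: a major-version change means older labels must be revisited."""
    return labeled_under.split(".")[0] != current.split(".")[0]

# Hypothetical annotation dates for three labeled examples.
labeled_on = [date(2024, 2, 10), date(2024, 4, 2), date(2024, 7, 19)]
versions = [version_in_effect(d) for d in labeled_on]
print(versions)                                               # ['1.0', '1.1', '2.0']
print([needs_relabel(v, HISTORY[-1][1]) for v in versions])   # [True, True, False]
```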

When your chatbot makes mistakes in production, don't just retrain on new examples. Ask: "Is this a model failure or a definition failure?"

Production error analysis:

Most teams only fix the 40%. The real wins come from fixing the 60%.
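Answering that question for every reviewed error, and routing the two kinds differently, can be as simple as a tagged tally. This sketch assumes a reviewer assigns each production error a cause of "model" or "definition". The records and the `routine_request` label are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class ProductionError:
    conversation_id: str
    predicted: str
    expected: str
    cause: str  # "model" or "definition", assigned by a human reviewer

def triage_summary(errors: list[ProductionError]) -> dict[str, float]:
    """Share of reviewed errors attributed to each cause."""
    if not errors:
        return {}
    counts = Counter(e.cause for e in errors)
    return {cause: n / len(errors) for cause, n in counts.most_common()}

def next_action(error: ProductionError) -> str:
    """Definition failures go to the definition owner; model failures to retraining."""
    if error.cause == "definition":
        return "revise definition, then relabel affected examples"
    return "add corrected example to retraining set"

errors = [
    ProductionError("c1", "urgent_request", "routine_request", "definition"),
    ProductionError("c2", "billing_information", "product_return", "model"),
    ProductionError("c3", "urgent_request", "routine_request", "definition"),
    ProductionError("c4", "product_return", "billing_information", "definition"),
]
print(triage_summary(errors))
for e in errors:
    print(e.conversation_id, next_action(e))
```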

Your chatbot doesn't exist in isolation. Customer support agents use their own definitions. Product teams have their own taxonomy. Marketing has yet another set of terms.

When these definitions don't align, every handoff from AI to human (or human to AI) becomes a translation problem.

Example misalignment:

One word, three completely different meanings across teams. Your chatbot will constantly misroute issues because it was trained on a definition that doesn't match how humans actually work.
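A first step toward alignment is simply finding the collisions. Assuming each team can export its glossary as term-to-meaning pairs, a few lines flag every term that different teams define differently. The term "escalation" and all meanings below are hypothetical. Exact string comparison is crude and only surfaces candidates for a conversation between teams.

```python
# Hypothetical glossaries exported by each team.
GLOSSARIES = {
    "chatbot": {
        "escalation": "Conversation handed from the bot to a human agent",
        "refund": "Money returned to the customer's original payment method",
    },
    "support": {
        "escalation": "Ticket moved to a tier-2 specialist",
        "refund": "Money returned to the customer's original payment method",
    },
    "product": {"escalation": "Bug report flagged for engineering"},
}

def conflicting_terms(glossaries: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Return {term: {team: meaning}} for terms that teams define differently."""
    by_term: dict[str, dict[str, str]] = {}
    for team, terms in glossaries.items():
        for term, meaning in terms.items():
            by_term.setdefault(term, {})[team] = meaning
    return {
        term: defs
        for term, defs in by_term.items()
        if len({m.strip().lower() for m in defs.values()}) > 1
    }

for term, defs in conflicting_terms(GLOSSARIES).items():
    print(term)
    for team, meaning in defs.items():
        print(f"  {team}: {meaning}")
```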

Here's your action plan to fix the definition crisis in your AI training pipeline:

Week 1: Audit

Week 2: Define

Week 3: Validate

Week 4: Implement

Ongoing: Maintain
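For the Week 1 audit, one concrete starting check is to compare the labels actually present in your annotated data with the labels your documentation defines. This sketch assumes examples are (text, label) pairs and the taxonomy is a dict mapping each label to its definition. The `billing_information` definition and the example messages are hypothetical.

```python
from collections import Counter

def audit(labeled_examples: list[tuple[str, str]], taxonomy: dict[str, str]) -> dict:
    """Compare labels used in the data against the documented taxonomy."""
    used = Counter(label for _, label in labeled_examples)
    return {
        "undefined": sorted(set(used) - set(taxonomy)),  # applied but never documented
        "unused": sorted(set(taxonomy) - set(used)),     # documented but never applied
        "counts": dict(used),
    }

taxonomy = {
    "urgent_request": "Customer states need for immediate resolution",
    "billing_information": "Questions about invoices, charges, or payment methods",
    "product_return": "A customer ships an item back for a refund",
}
examples = [
    ("I need this ASAP", "urgent_request"),
    ("This is time-sensitive", "high_priority"),
    ("Where is my invoice?", "billing_information"),
    ("Please expedite my order", "high_priority"),
]
print(audit(examples, taxonomy))
```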

Your AI chatbot is only as good as the definitions it was trained on. You can have the most sophisticated architecture, the largest training dataset, and the most powerful infrastructure - but if your training data was labeled using inconsistent, ambiguous, or outdated definitions, your chatbot will never reliably do what you need it to do.

The companies winning with AI aren't necessarily the ones with the most data or the biggest models. They're the ones who've solved the unglamorous but critical problem of definitional consistency.

Because in AI, like in everything else, you can't build something solid on a foundation of ambiguity.

Ready to build your AI on solid ground? Definable.ai helps teams create, maintain, and operationalize consistent definitions across their entire AI training pipeline. From initial labeling to production monitoring, ensure every decision is based on clear, shared understanding.

Request a demo to see how leading AI teams are eliminating the hidden costs of undefined terms.